Designing a Better Course Planning Experience

Scenario/Context

Who this is for: South Fayette Township School District is a public school district that includes both a middle school and a high school, with a focus on "future-focused, innovative, diverse, and high-quality learning opportunities." The district partnered with Carnegie Mellon University's Master of Human-Computer Interaction (MHCI) program on a capstone project, which assigned a team of four to the task they requested.

Team: Myself, Erin Sawyer, Vivian Li, and Lori Chen

Task

The task we were given focused on reframing and redesigning the course catalog (or Program of Studies), both as an artifact and as an experience.

The requirements as outlined in the initial project brief:

- Design an innovative course catalog to ensure descriptions are always current, highly usable on multiple devices, and are tailored to support student exploration and decisions about courses and careers

- Apply social learning theory, cognitive biases, and methods of designing for behavior change to encourage students to consider and choose courses outside of their friend groups

- Design instrumentation for a next-gen course catalog to collect and visualize usage data from stakeholders including students, guidance counselors, faculty, and parents

- Capture students' longitudinal sentiment about courses at enrollment, during delivery, at completion, and at graduation, to understand the impact on students between grades and after secondary education

Action

Note: Because I know your time is limited, this is the short version. For the version with the details and our more specific methods, please check out the following resources:

...or talk to me about it!

________________________

Starting Research

________________________

Through interviews and research with students, teachers, advisors, and counselors, our team narrowed the client's issues down to two main statements:

- The Program of Study has valuable information, but it's underutilized by students.

- Students appreciate the variety of courses and opportunities available to them, but the current form of the course catalog turns that variety into a burden. It is a PDF hundreds of pages long, parked on a part of the district website that few, if any, students check.

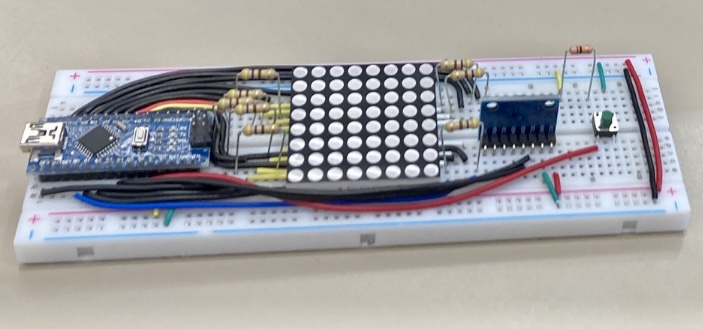

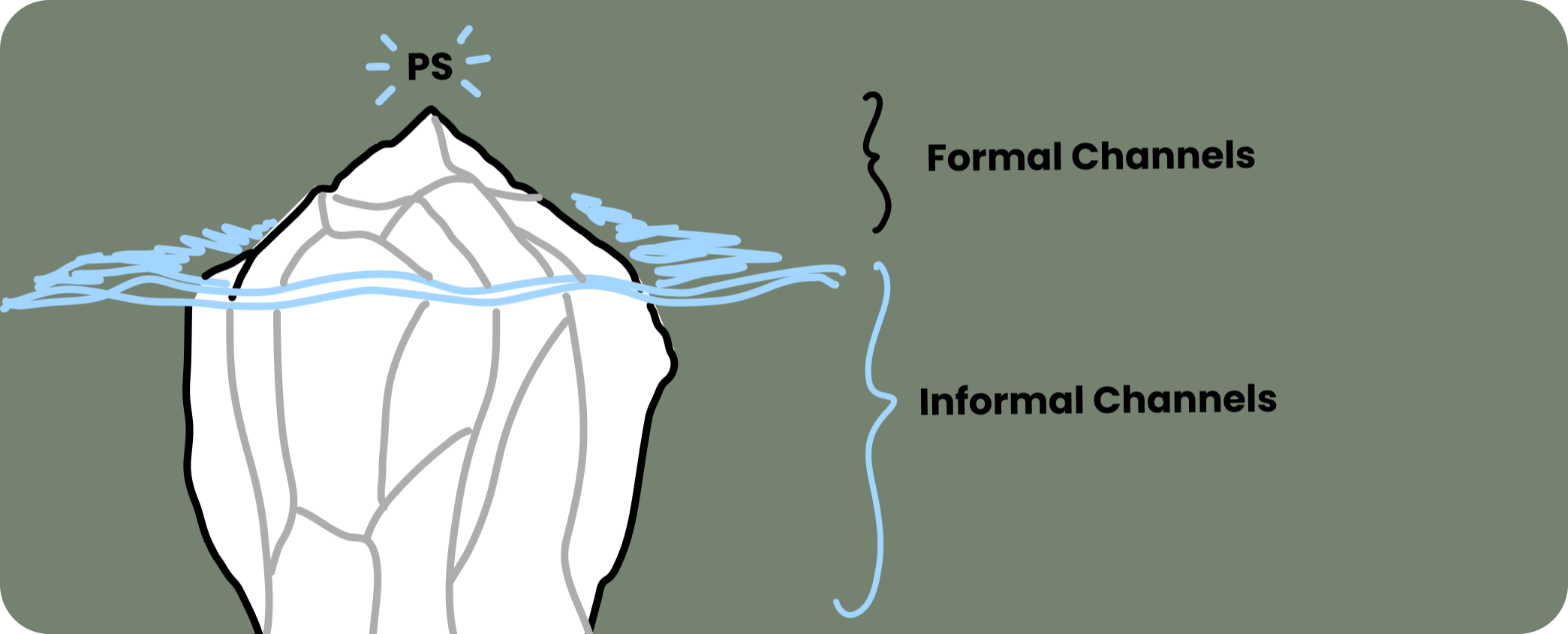

We also noticed a few other key facts. South Fayette uses PowerSchool for course registration. PowerSchool has space for course information, but the district doesn't use it, and students don't notice it's there. Because registration and course exploration happen in separate systems, there is a gap between where information is documented and where it is actually used. Students aren't using the Program of Studies for course information, and they aren't getting that information from PowerSchool either. So where do they get their ideas for classes and careers?

As it turns out, informal channels of communication (conversations with teachers, peers, family, and others) significantly broaden the range of information guiding students' decisions. Ultimately, much of this comes down to formal versus informal information sources, and to what counts as reliable, helpful information.

________________________

Things We Learned and Focus Areas

________________________

Effective educational systems should be dynamic, not just for a diverse set of users, but also for a rapidly changing future.

- We realized there is a mismatch between how quickly education is updated and how quickly the future it prepares students for is changing.

- To keep up, information and the systems that communicate it should be continuously created and maintained by and for the different stakeholders.

In our case, we focus primarily on students, but this is absolutely not meant to discount teachers or counselors. They need to know what to teach and advise for, so they need to be just as up to date.

Educational interfaces should inspire human connection.

- Teachers found the process of helping students discover their passions and future careers to be the most rewarding part of the job, and we shouldn't change that.

There is an information gap between students, teachers, and counselors.

- Inspiring human connection and supporting information transfer are goals, but problems arise when students have incorrect information, teachers don't know what current job or college markets are looking for, or counselors are out of touch with student goals and personalities. Our responsibility is to help close this gap.

And our guiding principle for this project: our solution should not simply make the current system more efficient. It might take many forms (the project ends in late July 2024), but one thing it WILL NOT BE is a prettier PDF, maybe with filtering capabilities.

So we landed on four core opportunity areas to address with our design:

Identity + Confidence

- We believe that students who are confident in themselves will make better decisions that feel authentic to them given the information they have, and will be better able to adapt to change, even when those decisions do not work out.

- When students have not formed a holistic sense of self-identity that they are confident in, they tend to tie self-identity to their academic performance. High school is turning into a constant game of comparison, to the point that some students may be cutting themselves off from opportunities early because they think they might fail or might be outshone.

- High school should be re-framed as an opportunity for growth, rather than something to optimize or a system to game.

Perception of Value

- We believe that students' current perception of course value may limit them from having a fuller educational journey.

- Students tend to value courses based on their perceived direct benefit to the future ("will this help me get into a prestigious college?") and may weed out exploratory courses that would let them pursue their interests.

- This may push students toward courses that are not a good fit for them, or that they are not prepared for.

- This sense of value may contain some truth, but students may over-optimize for perceived value rather than actual value, as defined by post-high school outcomes.

Community + Belonging

- We believe that students who can effectively engage with their community while remaining true to themselves will adopt a bigger-picture view that will aid in decision-making.

- Students turn to their personal networks for help selecting courses. Organic interactions are key to building those networks, and students seek out the guidance that feels most authentic to them.

- Relying on personal networks can be a double-edged sword. If students are not careful, it can expose them to bias, misinformation, and pressure.

Career Thinking

- We believe that by implementing interactive and experiential learning opportunities that bridge the gap between courses, career paths, and real-world contexts, students will be more engaged in exploring career connections.

- High school is a great opportunity for students to explore interests and career paths in a safe environment, but students are not necessarily taking advantage of it.

- When students have a goal in mind, they can better see connections between school and their intended career.

- Students need enough guidance to support exploration and development, without being so prescriptive that it limits them.

We explored these opportunity areas with four prototypes, each addressing different combinations of opportunities in different formats:

- A collaborative course catalog, where students offer reviews, tags, images, workload estimates, and other information to help peers

- A personal profile system, focused on understanding how a student's self-identity might correspond with career exploration. It uses Holland RIASEC attributes and analyzes strengths, as well as gaps to fill, for educational goals

- Swipe-to-match courses, focused on rapid course exploration and on testing presentation formats that encourage students to see courses with less bias or from new angles

- A career shift simulator card game, where students explore career thinking and flexibility by picking careers and deciding how they would approach dealing with (often outlandish) life situations that might cause a pivot

Elements of each of these made it into our main prototype for the spring semester.

Results and Summary

This section will be updated after summer 2024.

For now, our main prototype is an interactive tool that lets students build and visualize a personal profile and dynamically explore their interests and courses. Its speed-dating-style setup offers personalized recommendations and engaging information, so students can quickly check out different courses or dive deeper into the ones they like.

Prototype images and demos coming soon.